How to Block Bad Bots & Speed Up Your Site

Your website is like a coffee shop. People come in and browse the menu. Some order lattes, sit, sip, and leave.

But what if half your “customers” just occupy tables, waste your baristas’ time, and never buy coffee?

And what if, meanwhile, real customers leave because there are no free tables and the service is slow?

Well, that’s the world of web crawlers and bots.

These automated programs gobble up your bandwidth, slow down your site, and drive away actual customers.

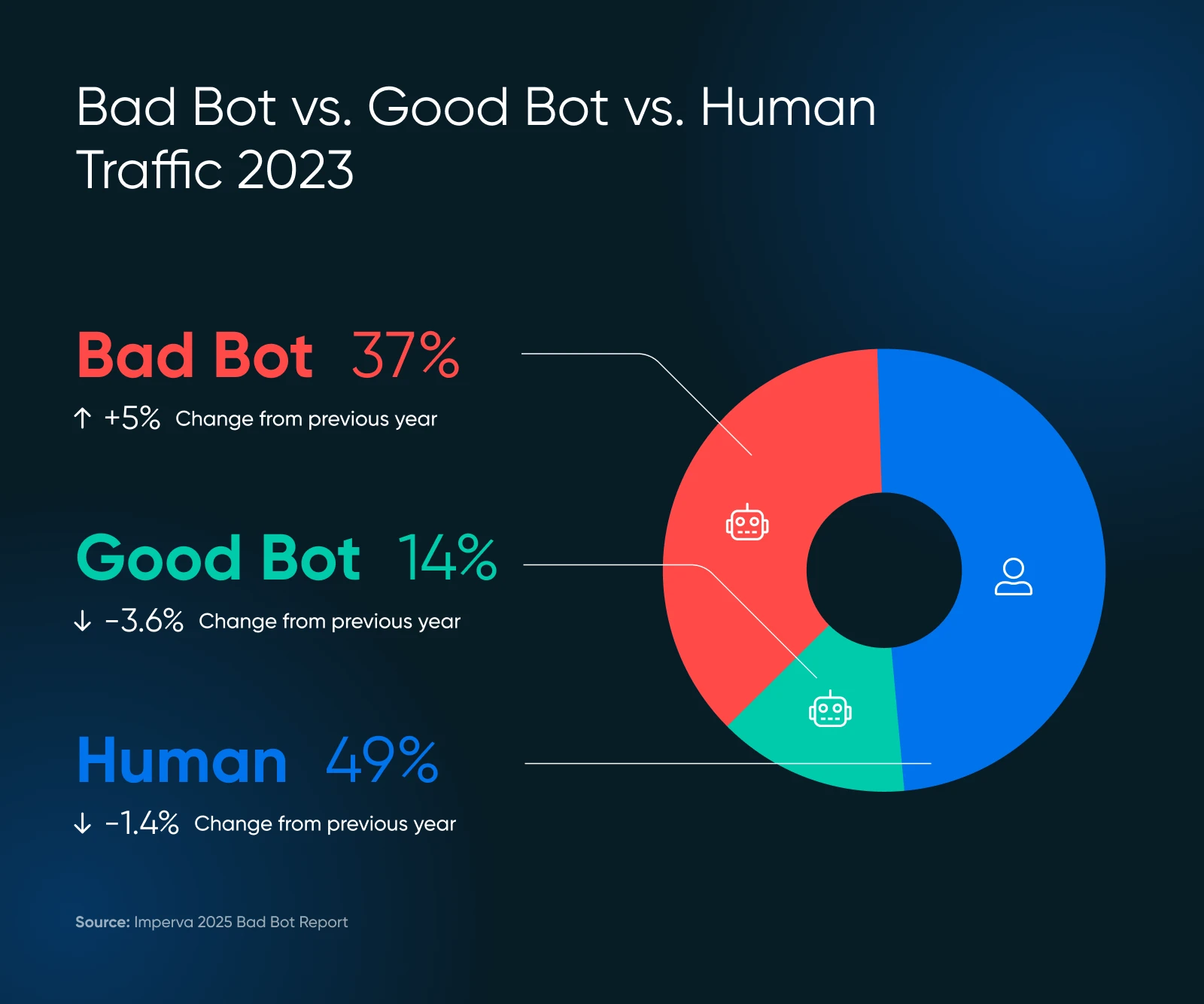

Recent studies show that almost 51% of internet traffic comes from bots. That’s right — more than half of your digital visitors may just be wasting your server resources.

But don’t panic!

This guide will help you spot trouble and control your site’s performance, all without coding or calling your techy cousin.

A Quick Refresher on Bots

Bots are automated software programs that perform tasks on the internet without human intervention. They:

- Visit websites

- Interact with digital content

- And execute specific functions based on their programming.

Some bots analyze and index your site (potentially improving search engine rankings). Others spend their time scraping your content for AI training datasets or, worse, posting spam, generating fake reviews, or looking for exploits and security holes in your website.

Of course, not all bots are created equal. Some are critical to the health and visibility of your website. Others are arguably neutral, and a few are downright toxic. Knowing the difference — and deciding which bots to block and which to allow — is crucial for protecting your site and its reputation.

Good Bot, Bad Bot: What’s What?

Bots make up a large share of internet traffic.

For instance, Google’s crawler, Googlebot, visits publicly reachable pages and adds them to Google’s index for ranking. This bot helps bring in valuable search traffic, which is important for the health of your website.

But not every bot is going to provide value, and some are outright harmful. Here’s what to keep and what to block.

The VIP Bots (Keep These)

- Search engine crawlers like Googlebot and Bingbot index your pages so they can appear in search results. Don’t block them, or you’ll become invisible online.

- Analytics bots, like the Google PageSpeed Insights bot or the GTmetrix bot, gather data about your site’s performance.

The Troublemakers (Need Managing)

- Content scrapers that steal your content for use elsewhere

- Spam bots that flood your forms and comments with junk

- Bad actors who attempt to hack accounts or exploit vulnerabilities

The scale of bad bot traffic might surprise you. In 2024, advanced bots made up 55% of all advanced bad bot traffic, while good ones accounted for 44%.

Those advanced bots are sneaky — they can mimic human behavior, including mouse movements and clicks, making them more difficult to detect.

Are Bots Bogging Down Your Website? Look for These Warning Signs

Before jumping into solutions, let’s make sure bots are actually your problem. Check out the signs below.

Red Flags in Your Analytics

- Traffic spikes without explanation: If your visitor count suddenly jumps but sales don’t, bots might be the culprit.

- Everything s-l-o-w-s down: Pages take longer to load, frustrating real customers who might leave for good. Research from Aberdeen shows that 40% of visitors abandon websites that take over three seconds to load, which leads to…

- High bounce rates: Rates above 90% often indicate bot activity.

- Weird session patterns: Humans don’t typically visit for just milliseconds or stay on one page for hours.

- Unusual traffic sources: Lots of visits from countries where you don’t do business is suspicious.

- Form submissions with random text: Classic bot behavior.

- Your server gets overwhelmed: Imagine seeing 100 customers at once, but 75 are just window shopping.

Check Your Server Logs

Your website’s server logs contain records of every visitor.

Here’s what to look for:

- Too many consecutive requests from the same IP address

- Strange user-agent strings (the identification that bots provide)

- Requests for unusual URLs that don’t exist on your site

User Agent

A user agent is a type of software that retrieves and renders web content so that users can interact with it. The most common examples are web browsers and email readers.


A legitimate Googlebot request might look like this in your logs:

66.249.78.17 - - [13/Jul/2015:07:18:58 -0400] "GET /robots.txt HTTP/1.1" 200 0 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"

If you see patterns that don’t match normal human browsing behavior, it’s time to take action.
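If you or your developer want a quick first pass over a log file, a short script can do the counting. The sketch below is one possible approach, not a definitive tool: it assumes your server writes the common "combined" log format, and the second IP address and the ExampleScraper user agent are made-up sample data.

```python
import re
from collections import Counter

# Combined log format: IP, identity, user, [time], "request", status, size,
# "referer", "user agent". Other log formats will need a different pattern.
LOG_PATTERN = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) \S+ '
    r'"(?P<referer>[^"]*)" "(?P<agent>[^"]*)"$'
)


def summarize_log(lines, top=5):
    """Count requests per IP address and per user agent."""
    ips, agents = Counter(), Counter()
    for line in lines:
        match = LOG_PATTERN.match(line.strip())
        if not match:
            continue  # skip lines that are not in the expected format
        ips[match["ip"]] += 1
        agents[match["agent"]] += 1
    return ips.most_common(top), agents.most_common(top)


# Hypothetical sample lines; in practice, read them from your access log.
sample = [
    '66.249.78.17 - - [13/Jul/2015:07:18:58 -0400] "GET /robots.txt HTTP/1.1" 200 0 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.9 - - [13/Jul/2015:07:19:01 -0400] "GET /wp-login.php HTTP/1.1" 404 0 "-" "ExampleScraper/1.0"',
    '203.0.113.9 - - [13/Jul/2015:07:19:01 -0400] "GET /old-page HTTP/1.1" 404 0 "-" "ExampleScraper/1.0"',
]

top_ips, top_agents = summarize_log(sample)
print("Busiest IPs:", top_ips)
print("Busiest user agents:", top_agents)
```

An IP address or user agent that towers over everything else in these counts is a good candidate for a closer look.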

The GPTBot Problem as AI Crawlers Surge

Recently, many website owners have reported issues with AI crawlers generating abnormal traffic patterns.

According to data from Vercel, OpenAI’s GPTBot made 569 million requests across its network in a single month, while Claude’s bot made 370 million.

Look for:

- Error spikes in your logs: If you suddenly see hundreds or thousands of 404 errors, check if they’re from AI crawlers.

- Extremely long, nonsensical URLs: AI bots might request bizarre URLs like the following:

/Odonto-lieyectoresli-541.aspx/assets/js/plugins/Docs/Productos/assets/js/Docs/Productos/assets/js/assets/js/assets/js/vendor/images2021/Docs/…

- Recursive parameters: Look for endless repeating parameters, for example:

amp;amp;amp;page=6&page=6

- Bandwidth spikes: Read the Docs, a popular documentation hosting platform, reported that one AI crawler downloaded 73TB of ZIP files, with 10TB downloaded in a single day, costing them over $5,000 in bandwidth charges.

These patterns can indicate AI crawlers that are either malfunctioning or being manipulated to cause problems.
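The URL patterns above are easy to check for in code. Here is a rough Python sketch of that check; the function name, the thresholds (200 characters, more than 3 repeats), and the sample URLs are assumptions picked for illustration, so tune them against your own logs.

```python
from collections import Counter
from urllib.parse import urlsplit, parse_qsl


def suspicious_url_reasons(url, max_length=200, max_repeats=3):
    """Return a list of reasons a requested URL looks like crawler noise."""
    reasons = []
    parts = urlsplit(url)

    if len(url) > max_length:
        reasons.append("very long URL")

    # The same folder name showing up again and again suggests a crawler
    # that keeps resolving relative links against the wrong base path.
    segments = [s for s in parts.path.split("/") if s]
    if segments and max(Counter(segments).values()) > max_repeats:
        reasons.append("repeated path segments")

    # The same query parameter repeated, or a pile-up of "amp;" prefixes.
    names = [name for name, _ in parse_qsl(parts.query, keep_blank_values=True)]
    if len(names) != len(set(names)) or url.count("amp;") >= 2:
        reasons.append("recursive parameters")

    return reasons


# Hypothetical requests modeled on the patterns described above.
looping_path = "/catalog.aspx/assets/js/Docs/assets/js/Docs/assets/js/assets/js/assets/js/vendor/"
looping_query = "/shop?amp;amp;amp;page=6&page=6"
normal = "/blog/how-to-block-bots"

for url in (looping_path, looping_query, normal):
    print(url, "->", suspicious_url_reasons(url) or "looks fine")
```

Running something like this over the 404 entries in your logs gives you a shortlist of requests worth tracing back to a user agent.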

When To Get Technical Help

If you spot these signs but don’t know what to do next, it’s time to bring in professional help. Ask your developer to check for specific user agents like this one:

Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; GPTBot/1.2; +https://openai.com/gptbot)

You can look up the user agent strings of other AI crawlers and block those too. Do note that the strings change over time, so you might end up with quite a large list.
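One way to keep that list manageable is to match on the short crawler name inside the string rather than the full string, since version numbers change more often than names. A minimal Python sketch of the idea follows; the token list is only an example and the function name is made up for this illustration.

```python
# Example tokens only; extend the tuple with the crawlers you care about.
AI_CRAWLER_TOKENS = ("GPTBot", "ClaudeBot", "CCBot")


def matched_crawler(user_agent, tokens=AI_CRAWLER_TOKENS):
    """Return the first crawler token found in a user-agent string, or None."""
    lowered = user_agent.lower()
    for token in tokens:
        if token.lower() in lowered:
            return token
    return None


gptbot_ua = (
    "Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; "
    "GPTBot/1.2; +https://openai.com/gptbot)"
)
browser_ua = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) Firefox/128.0"

print(matched_crawler(gptbot_ua))
print(matched_crawler(browser_ua))
```

Keep in mind that a user agent is self-reported, so this catches crawlers that identify themselves honestly and nothing more.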

👉 Don’t have a developer on speed dial? DreamHost’s DreamCare team can analyze your logs and implement protection measures. They’ve seen these issues before and know exactly how to handle them.

Now for the good part: how to stop these bots from slowing down your site. Roll up your sleeves and let’s get to work.

1. Create a Proper robots.txt File

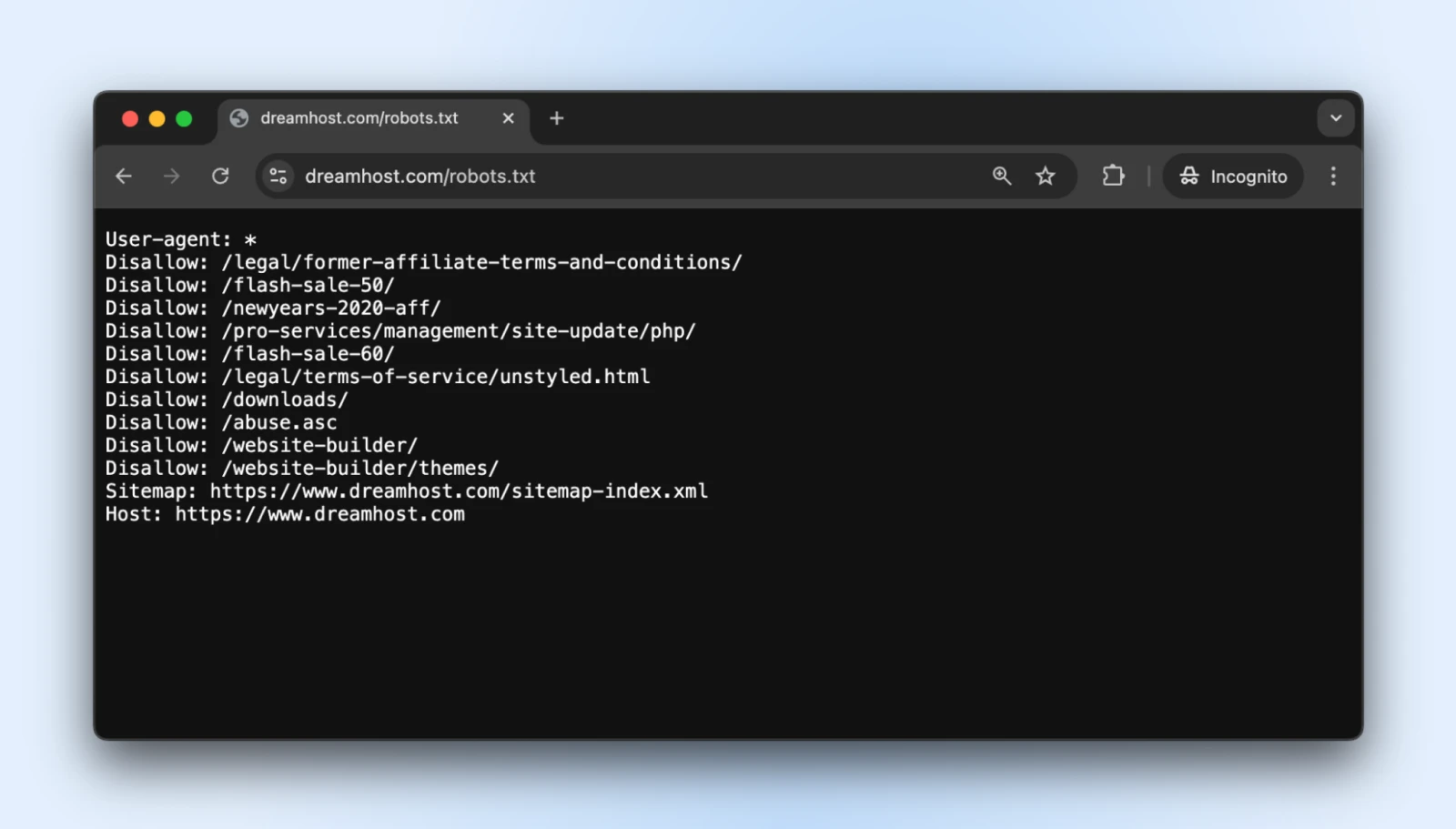

The robots.txt file is a simple text file that sits in your site’s root directory and tells well-behaved bots which parts of your site they shouldn’t access.

You can view the robots.txt for pretty much any website by adding /robots.txt to its domain. For instance, if you want to see the robots.txt file for DreamHost, add /robots.txt at the end of the domain like this: https://dreamhost.com/robots.txt

No bot is obligated to follow these rules.

Polite bots will respect them, while troublemakers can simply ignore them. It’s best to add a robots.txt anyway so the good bots don’t start indexing your admin login, post-checkout pages, thank-you pages, and so on.

How to Implement

1. Create a plain text file named robots.txt

2. Add your instructions using this format:

User-agent: * # This line applies to all bots

Disallow: /admin/ # Don’t crawl the admin area

Disallow: /private/ # Stay out of private folders

Crawl-delay: 10 # Wait 10 seconds between requests

User-agent: Googlebot # Special rules just for Google

Allow: / # Google can access everything

3. Upload the file to your website’s root directory (so it’s at yourdomain.com/robots.txt)

The “Crawl-delay” directive is your secret weapon here. It asks bots to wait between requests so they don’t hammer your server.

Not every crawler honors it. Bingbot does, for example, while Googlebot ignores Crawl-delay and adjusts its crawl rate automatically based on how your server responds.

Pro tip: Check your robots.txt with the robots.txt report in Google Search Console to ensure you haven’t accidentally blocked important content.
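You can also sanity-check your rules locally before uploading them. The sketch below uses Python’s built-in urllib.robotparser module on the example rules from step 2; yourdomain.com is a placeholder, and GPTBot simply stands in for “any bot without its own section.” Python’s parser is one interpretation of the rules, so real crawlers may differ in edge cases.

```python
from urllib.robotparser import RobotFileParser

# The example rules from above, without the inline comments.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /private/
Crawl-delay: 10

User-agent: Googlebot
Allow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def report(agent, url):
    """Print and return whether `agent` may fetch `url` under these rules."""
    allowed = parser.can_fetch(agent, url)
    print(f"{agent} -> {url}: {'allowed' if allowed else 'blocked'}")
    return allowed


report("GPTBot", "https://yourdomain.com/admin/")
report("GPTBot", "https://yourdomain.com/blog/")
report("Googlebot", "https://yourdomain.com/admin/")
print("Crawl delay for GPTBot:", parser.crawl_delay("GPTBot"))
```

One thing this makes visible: a bot that has its own section follows only that section. With the rules above, Googlebot is not bound by the Disallow lines in the general section, so repeat them under Googlebot if you want Google to skip those folders too.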

2. Set Up Rate Limiting

Rate limiting restricts how many requests a single visitor can make within a specific period.

It prevents bots from overwhelming your server so normal humans can browse your site without interruption.
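To make the idea concrete, here is a minimal Python sketch of a sliding-window rate limiter. It illustrates the concept only and is not what Apache, Nginx, or any CDN runs internally; the class name, the limit of 3 requests per 10 seconds, and the IP address are all assumptions chosen for the demo.

```python
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most `limit` requests per `window` seconds for each client."""

    def __init__(self, limit=30, window=60.0):
        self.limit = limit
        self.window = window
        self.history = defaultdict(deque)  # client IP -> recent request times

    def allow(self, client_ip, now):
        times = self.history[client_ip]
        # Forget requests that have fallen out of the time window.
        while times and now - times[0] >= self.window:
            times.popleft()
        if len(times) >= self.limit:
            return False  # over the limit; a server would refuse this request
        times.append(now)
        return True


# Demo: one client sends requests at these times (in seconds).
limiter = SlidingWindowLimiter(limit=3, window=10.0)
results = [limiter.allow("203.0.113.9", now=t) for t in (0.0, 1.0, 2.0, 3.0, 12.5)]
print(results)
```

The fourth request is refused because it is the fourth within ten seconds; by the time the fifth arrives, the earlier ones have aged out and the client is let through again. A human clicking around rarely notices a limit like this, while a bot firing dozens of requests per second hits it immediately.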

How to Implement

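If you’re using Apache (common for WordPress sites), be aware that mod_rewrite rules in .htaccess cannot count requests, so they cannot enforce a true per-IP limit. For that you need a server-level module such as mod_evasive or mod_qos, which your host or developer has to configure. What .htaccess can do is turn away a specific crawler by its user agent while keeping robots.txt reachable. For example, to refuse GPTBot, add these lines to your .htaccess file:

<IfModule mod_rewrite.c>

RewriteEngine On

# Keep robots.txt readable so bots can still see your rules

RewriteCond %{REQUEST_URI} !^/robots\.txt$ [NC]

# Match the crawler to refuse (GPTBot is just an example)

RewriteCond %{HTTP_USER_AGENT} GPTBot [NC]

# Answer matching requests with 403 Forbidden

RewriteRule .* - [F,L]

</IfModule>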

.htaccess

“.htaccess” is a configuration file used by the Apache web server software. The .htaccess file contains directives (instructions) that tell Apache how to behave for a particular website or directory.


If you’re on Nginx, add this to your server configuration (the limit_req_zone line belongs in the http block):

limit_req_zone $binary_remote_addr zone=one:10m rate=30r/m;

server {

…

location / {

limit_req zone=one burst=5;

…

}

}

Many hosting control panels, like cPanel or Plesk, also offer rate-limiting tools in their security sections.

Pro tip: Start with conservative limits (like 30 requests per minute) and monitor your site. You can always tighten restrictions if bot traffic continues.

3. Use a Content Delivery Network (CDN)

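CDNs do two good things for you: they serve your content from servers close to each visitor, so pages load faster, and they filter out irrelevant bots before that traffic reaches your server.

The “irrelevant bots” part is what matters to us for now, but the speed benefit is useful too. Most CDNs include built-in bot management that identifies and blocks suspicious visitors automatically.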

How to Implement

If your hosting service offers a CDN by default, you can skip the setup steps entirely, since your website will automatically be served through the CDN.

Once set up, your CDN will:

- Cache static content to reduce server load.

- Filter suspicious traffic before it reaches your site.

- Apply machine learning to differentiate between legitimate and malicious requests.

- Block known malicious actors automatically.

Pro tip: Cloudflare’s free tier includes basic bot protection that works well for most small business sites. Their paid plans offer more advanced options if you need them.

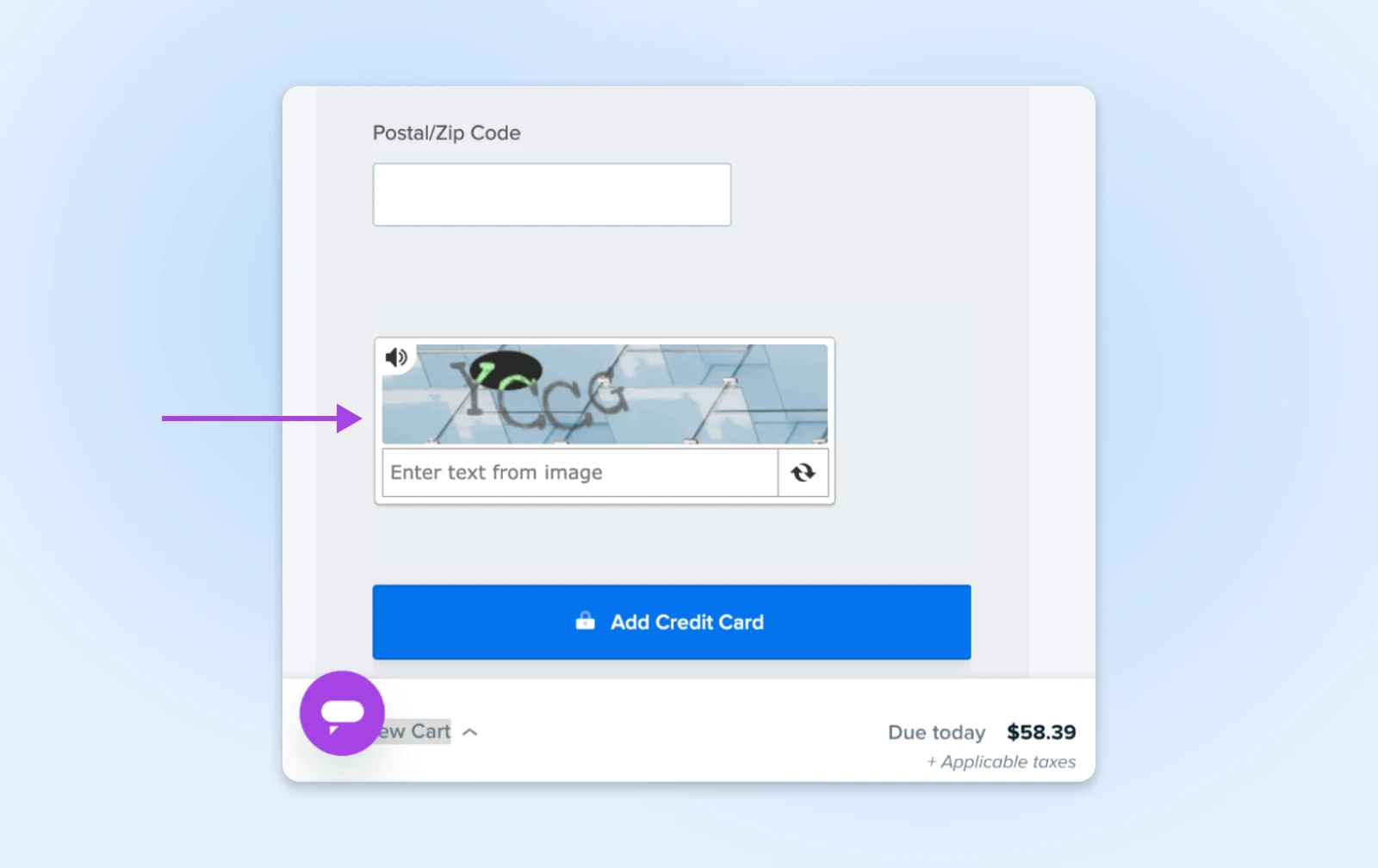

4. Add CAPTCHA for Sensitive Actions

CAPTCHAs are those little puzzles that ask you to identify traffic lights or bicycles. They’re annoying for humans but nearly impossible for most bots, making them perfect gatekeepers for important areas of your site.

How to Implement

Add a CAPTCHA to the places bots target most:

- Login pages

- Contact forms

- Checkout processes

- Comment sections

For WordPress users, plugins like Akismet can filter spam automatically for comments and form submissions.

Pro tip: Modern invisible CAPTCHAs (like reCAPTCHA v3) work behind the scenes for most visitors, only showing challenges to suspicious users. Use this method to gain protection without annoying legitimate customers.

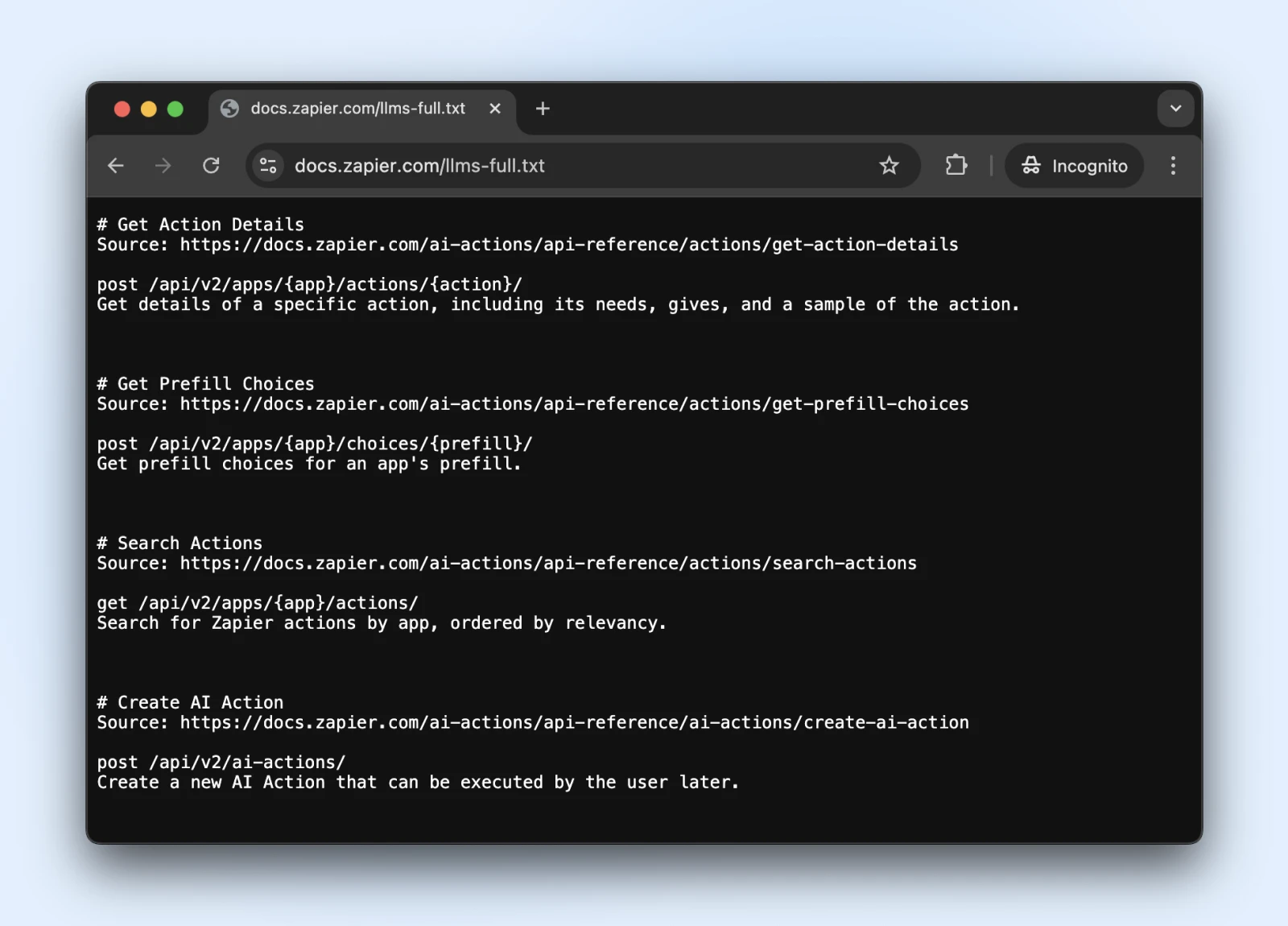

5. Consider the New llms.txt Standard

The llms.txt standard is a recent proposal that aims to guide how AI systems interact with your content.

It’s like robots.txt but specifically for telling AI systems what information they can access and what they should avoid.

How to Implement

1. Create a markdown file named llms.txt with this content structure:

# Your Website Name

> Brief description of your site

## Main Content Areas

- [Product Pages](https://yoursite.com/products): Information about products

- [Blog Articles](https://yoursite.com/blog): Educational content

## Restrictions

- Please don’t use our pricing information in training

2. Upload it to your root directory (at yourdomain.com/llms.txt) → Reach out to a developer if you don’t have direct access to the server.

Is llms.txt an official standard? Not yet.

It’s a proposal made in late 2024 by Jeremy Howard, and it has been adopted by Zapier, Stripe, Cloudflare, and many other large companies. The list of websites adopting llms.txt keeps growing.

So, if you want to jump on board, the proposal’s official documentation on GitHub includes implementation guidelines.

Pro tip: Once implemented, check that AI tools can actually read the file. Ask ChatGPT (with web search enabled) or another LLM to open your llms.txt URL with a prompt such as “Check if you can read this page” or “What does the page say.”

We can’t know whether bots will respect llms.txt anytime soon. However, if AI search tools can already read and understand the file, they may start respecting it in the future, too.
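If your site has many sections, you may prefer to generate the file rather than write it by hand. This is a small Python sketch of that, assuming the same layout as the example in step 1 (a title, a one-line description, then headed lists of links); the function name and the sample data are made up for illustration.

```python
def build_llms_txt(site_name, summary, sections):
    """Render llms.txt text: a title, a quoted summary, and headed link lists."""
    lines = [f"# {site_name}", "", f"> {summary}", ""]
    for heading, links in sections.items():
        lines.append(f"## {heading}")
        lines.append("")
        for title, url, note in links:
            lines.append(f"- [{title}]({url}): {note}")
        lines.append("")
    return "\n".join(lines).rstrip() + "\n"


# Placeholder content; swap in your own pages.
llms_txt = build_llms_txt(
    "Your Website Name",
    "Brief description of your site",
    {
        "Main Content Areas": [
            ("Product Pages", "https://yoursite.com/products", "Information about products"),
            ("Blog Articles", "https://yoursite.com/blog", "Educational content"),
        ],
    },
)
print(llms_txt)
```

Save the output as llms.txt and upload it the same way you would the handwritten version.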

Monitoring and Maintaining Your Site’s Bot Protection

So you’ve set up your bot defenses — awesome work!

Just keep in mind that bot technology is always evolving, meaning bots come back with new tricks. Let’s make sure your site stays protected for the long haul.

- Schedule regular security check-ups: Once a month, look at your server logs for anything fishy and make sure your robots.txt and llms.txt files are updated with any new page links that you’d like the bots to access/not access.

- Keep your bot blocklist fresh: Bots keep changing their disguises. Follow security blogs (or let your hosting provider do it for you) and update your blocking rules at regular intervals.

- Watch your speed: Bot protection that slows your site to a crawl isn’t doing you any favors. Keep an eye on your page load times and fine-tune your protection if things start getting sluggish. Remember, real humans are impatient creatures!

- Consider going on autopilot: If all this sounds like too much work (we get it, you have a business to run!), look into automated solutions or managed hosting that handles security for you. Sometimes the best DIY is DIFM — Do It For Me!

A Bot-Free Website While You Sleep? Yes, Please!

Pat yourself on the back. You’ve covered a lot of ground here!

However, even with our step-by-step guidance, this stuff can get pretty technical. (What exactly is an .htaccess file anyway?)

And while DIY bot management is certainly possible, you might find that your time is better spent running the business.

DreamCare is the “we’ll handle it for you” button you’re looking for.

Our team keeps your site protected with:

- 24/7 monitoring that catches suspicious activity while you sleep

- Regular security reviews to stay ahead of emerging threats

- Automatic software updates that patch vulnerabilities before bots can exploit them

- Comprehensive malware scanning and removal if anything sneaks through

See, bots are here to stay. And considering their rise in the last few years, we could see more bots than humans in the near future. No one knows.

But, why lose sleep over it?

This page contains affiliate links. This means we may earn a commission if you purchase services through our link without any extra cost to you.